SMITE-50 Dataset and Benchmark

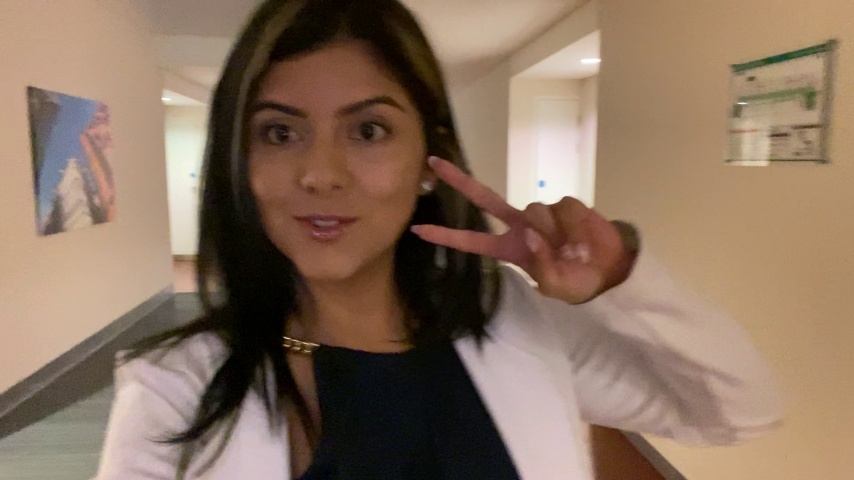

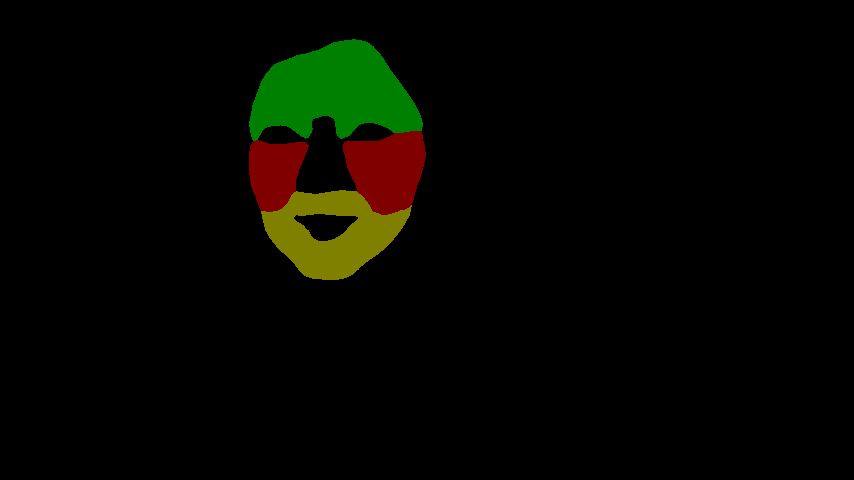

SMITE-50 is a video dataset designed for challenging segmentation tasks involving multiple

object

parts in difficult scenarios such as occlusion. It consists of 50 videos, each up to 20 seconds

long, with frame counts ranging from 24 to 400 and various aspect ratios (vertical and horizontal).

The dataset includes four main classes: “Horses,” “Faces,” “Cars,” and “Non-Text.” Videos in the

“Horses” and “Cars” categories, captured outdoors, present challenges like occlusion, viewpoint

changes, and fast-moving objects in dynamic backgrounds, while “Faces” involve occlusion, scale

changes, and fine-grained parts that are difficult to track and segment over time. The “Non-Text”

category includes videos with parts that cannot be described using natural language, making them

challenging for zero-shot video segmentation models that rely on textual vocabularies. Primarily

sourced from Pexels, SMITE-50 features multi-granularity annotations, focusing on Horses,

Human

Faces, and Cars, with a total of 41 videos. Each subset includes ten segmented reference images for

training and densely annotated videos for testing, with granularity varying from human eyes to

animal heads, relevant for applications like VFX. Additionally, nine videos feature segments that

cannot be described textually. The dataset includes dense annotations, with masks created for every

fifth frame and an average of six parts per frame across three granularity types. Compared to

PumaVOS, which has 8% dense annotations, SMITE-50 provides 20% dense annotations. Although

still a

work in progress, SMITE-50 aims to be publicly available in the future.